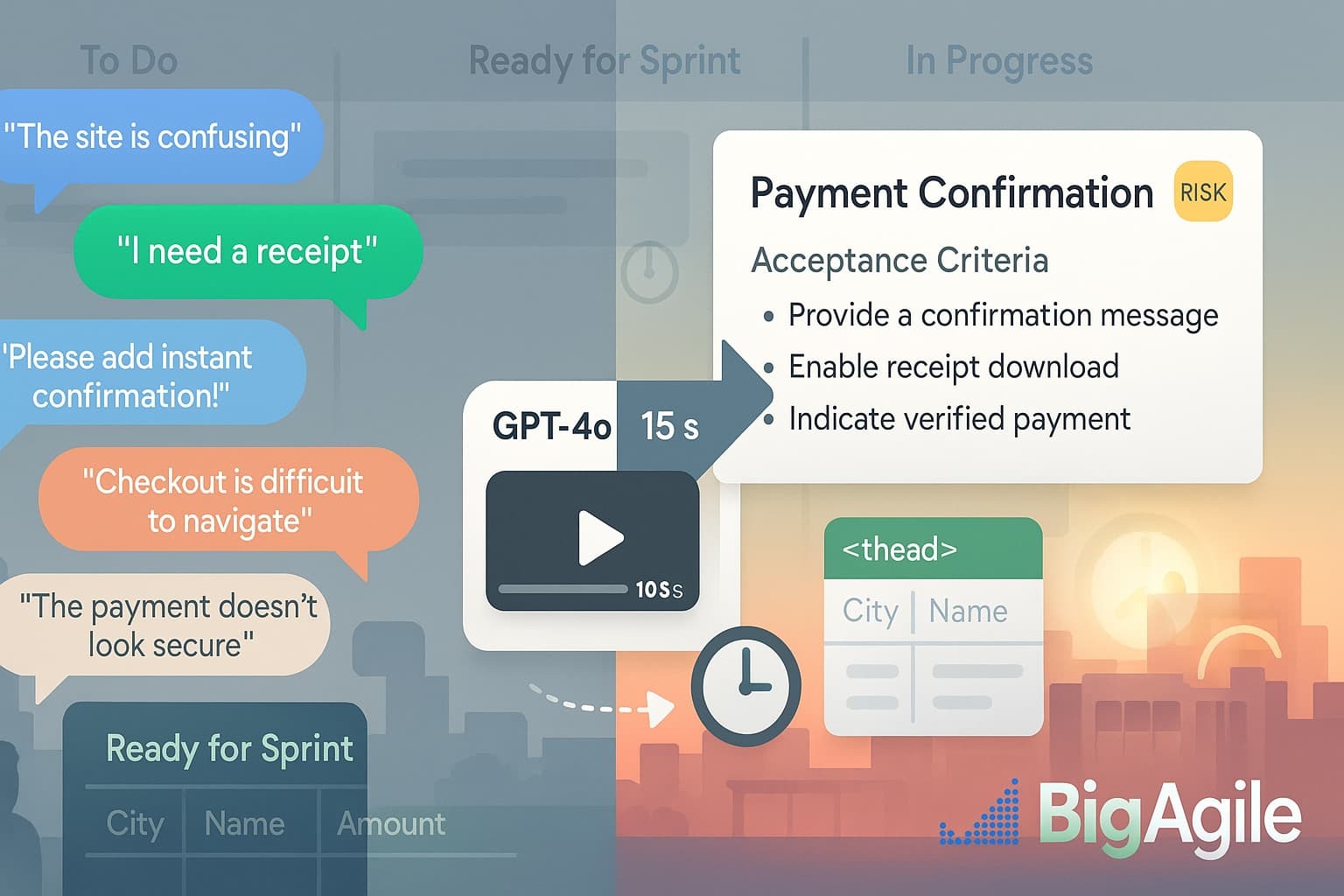

Backlog refinement often stalls because teams spend precious time translating customer feedback into clear, testable work items. Used thoughtfully, AI can accelerate that step and free people to focus on the judgment calls only humans can make: prioritizing value, negotiating trade-offs, and validating outcomes.

This post marks the beginning of a short series on practical AI support for product development. Today we’ll show how to convert a real customer quote into a well-formed user story, three acceptance criteria, and an initial risk flag in seconds, giving your team a head start on the conversations that matter most.

The Pain.

Refinement sessions often stall during translation. Stakeholders share raw anecdotes; Product Owners rephrase them into “As a user” templates; developers speculate about edge cases. LinearB’s 2025 benchmarks indicate that this back-and-forth takes up about 40% of a typical team’s refinement time and still leaves gaps that lead to rework later. Ambiguous backlog items increase cycle time, raise risk, and reduce confidence because no one is sure they’re building the right thing.

The Bet.

When AI creates the first draft, teams can use their limited face-to-face time for judgment, prioritization, and trade-offs. Inputting a customer quote into GPT-4o generates a concise user story, three testable acceptance criteria, and an initial risk flag in less than a minute. This jump-start shifts the focus from a transcription task to a discussion on value and feasibility. The expected benefits are shorter meetings, clearer backlog items, faster developer onboarding, and far fewer “What did this story really mean?” moments during a sprint. I've seen plenty of that!

Proof in One File.

Everything runs through a single script, quote2story.py, so teams can test the workflow without setting up new infrastructure.

The script takes a plain-text customer quote, calls GPT-4o with a structured prompt, and returns JSON containing the user story, acceptance criteria, and a risk rating. It then adds that output as a new row in the backlog table stored in Confluence (or any wiki that can display raw HTML).

No databases, webhooks, or plug-ins are needed, just a token for the OpenAI API and the page URL of your table. The same script can be triggered from a slash command in Slack, a button in your backlog tool, or a nightly batch that processes a queue of new quotes. By keeping the proof point in a single, self-contained file, you obtain an auditable record of each AI suggestion, an easy rollback option if the team requires revisions, and a lightweight way to measure the time saved versus your current refinement process baseline.
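A nightly batch with an audit trail could look roughly like the sketch below. It is an illustration under stated assumptions, not part of quote2story.py: `process_queue`, the injected `convert` callable, and the `quote_audit.jsonl` file name are all hypothetical. Each quote is timed and logged as one JSON line, which gives you both the record of every AI suggestion and raw numbers to compare against your refinement baseline.

```python
import json
import time
from datetime import datetime, timezone
from typing import Callable, Iterable


def process_queue(quotes: Iterable[str],
                  convert: Callable[[str], dict],
                  log_path: str = "quote_audit.jsonl") -> int:
    """Run each queued quote through `convert` and append one audit line per quote."""
    processed = 0
    with open(log_path, "a", encoding="utf-8") as log:
        for quote in quotes:
            quote = quote.strip()
            if not quote:  # skip blank lines in the queue
                continue
            started = time.perf_counter()
            result = convert(quote)  # hypothetical: wraps the model call
            entry = {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "quote": quote,
                "result": result,
                "seconds": round(time.perf_counter() - started, 3),
            }
            log.write(json.dumps(entry, ensure_ascii=False) + "\n")
            processed += 1
    return processed
```

Passing the converter in as a parameter keeps the batch loop testable without an API key; in practice you would hand it a small function that wraps the script's model call.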

What we stitch together

- quote2story.py, a 50-line wrapper around OpenAI’s chat API.
- Quote → Outcome HTML table, lives in Confluence; the script appends a new row on every run.
- Risk tag bot, reads GPT’s risk score; labels the card “🟢 Low,” “🟡 Medium,” or “🔴 High.”
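The risk tag step can be as small as a lookup. The sketch below assumes the model returns one of the three ratings named in the prompt; `RISK_LABELS` and `risk_label` are hypothetical names used for illustration.

```python
# Emoji labels from the list above, keyed by lower-cased rating.
RISK_LABELS = {"low": "🟢 Low", "medium": "🟡 Medium", "high": "🔴 High"}


def risk_label(risk: str) -> str:
    """Map the model's risk rating to the label shown on the card."""
    key = risk.strip().lower()
    if key not in RISK_LABELS:
        # Surface unexpected ratings instead of silently mislabeling a card.
        raise ValueError(f"unknown risk rating: {risk!r}")
    return RISK_LABELS[key]


print(risk_label("Medium"))  # 🟡 Medium
```

Raising on an unknown rating is a deliberate choice here: a card with no label is easier to spot in refinement than one with the wrong label.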

```python
#!/usr/bin/env python3
"""
Convert a raw customer quote into:
  • User story
  • 3 acceptance criteria
  • Risk flag
Append the result to an HTML backlog table.
"""
import json
import os

import bs4
import requests
from openai import OpenAI

# openai>=1.0 client; the legacy openai.ChatCompletion interface was removed in 1.0.
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
TABLE_URL = os.getenv("QUOTE_TABLE_URL")  # raw HTML file in wiki

PROMPT = """
You are a Product Owner. Transform the customer quote below into JSON with:
story: user story in 1 sentence
criteria: list[3] of Given/When/Then
risk: Low|Medium|High
"""


def to_table_row(quote, data):
    # Fetch the current table and fail loudly on HTTP errors.
    resp = requests.get(TABLE_URL, timeout=30)
    resp.raise_for_status()
    soup = bs4.BeautifulSoup(resp.text, "html.parser")
    tbody = soup.find("tbody")
    row = soup.new_tag("tr")
    for cell in (quote, data["story"],
                 "\n".join(data["criteria"]), str(data["risk"])):
        td = soup.new_tag("td")
        td.string = cell
        row.append(td)
    tbody.append(row)
    # Write the updated table locally; commit back via CI.
    with open("quote_table.html", "w", encoding="utf-8") as f:
        f.write(str(soup))


def main(quote: str):
    rsp = client.chat.completions.create(
        model="gpt-4o",
        response_format={"type": "json_object"},  # request a parseable JSON reply
        messages=[{"role": "system", "content": PROMPT},
                  {"role": "user", "content": quote}])
    data = json.loads(rsp.choices[0].message.content)
    to_table_row(quote, data)
    print("✅ Story added:\n", json.dumps(data, indent=2))


if __name__ == "__main__":
    main("I never know if my payment went through until three emails later.")
```
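The script trusts whatever `json.loads` returns, so a reply with a missing key or a fourth criterion would flow straight into the table. One way to guard against that is a shape check before appending the row. This is a minimal sketch, assuming the three keys named in the prompt (`story`, `criteria`, `risk`); `validate_story` and `ALLOWED_RISKS` are hypothetical helpers, not part of the original script.

```python
import json

ALLOWED_RISKS = {"Low", "Medium", "High"}


def validate_story(raw: str) -> dict:
    """Parse the model reply and check it has the shape the prompt asks for."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    story = data.get("story")
    if not isinstance(story, str) or not story.strip():
        raise ValueError("story must be a non-empty string")
    criteria = data.get("criteria")
    if (not isinstance(criteria, list) or len(criteria) != 3
            or not all(isinstance(c, str) for c in criteria)):
        raise ValueError("criteria must be a list of three strings")
    risk = data.get("risk")
    if not isinstance(risk, str) or risk not in ALLOWED_RISKS:
        raise ValueError(f"risk must be one of {sorted(ALLOWED_RISKS)}")
    return data
```

A failed check is a useful signal in its own right: it tells the team to retry the quote or handle it by hand rather than refine a malformed story.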

| Customer Quote | AI-Generated Story | Acceptance Criteria | Risk |
|---|---|---|---|
| “I never know if my payment went through until three emails later.” | As an online shopper, I want a single confirmation screen so I know my payment succeeded without waiting for emails. | 1. Given a successful transaction, When payment completes, Then show confirmation within 2s. 2. Given a failed transaction, When payment declines, Then display error and retry options. 3. Given confirmation, When user revisits order history, Then status shows “Paid”. | 🟡 Medium |

Refining the backlog shouldn’t feel like decoding hieroglyphics. By letting AI handle the first translation pass from raw customer words to clear, testable stories, we gain time for the conversations that truly need human brains: sizing risk, aligning priorities, and validating real value.

Drop the script into your workflow, review the output together, adjust what matters, and watch idle refinement time melt away. This is not meant to take the place of conversations with the team! Tomorrow we’ll build on this with AI-assisted risk poker, so keep your quotes coming and your table open.