🚀 The Problem with “AI Project” Thinking

Most organizations fail with AI for a common reason: they treat it as a project rather than a capability. My last YouTube video introduced this fundamental problem; this post digs deeper.

I see organizations build pilots, hire data scientists, and launch initiatives with deadlines and deliverables, but no continuous learning loop. And the results speak for themselves: billions of dollars spent on proofs of concept that never scale, models that become outdated before production, and dashboards no one uses.

AI doesn’t fail because of bad technology. It fails because of a rigid strategy: the belief that we can predict value before we learn it. That is the same problem that spawned the iterative and incremental development movement of the late 1980s, the forerunner of what we now call Agile.

If agility taught us anything, it’s this: the real differentiator isn’t who builds faster. It’s who learns faster.

🔄 Strategy as a Rolling Hypothesis, Not a Fixed Plan

Traditional strategic planning assumes stability. But AI is a prime example of uncertainty, and the best way to mitigate that risk is a constant feedback cycle between data, action, and outcome.

That means leaders must treat AI strategy as a rolling hypothesis, not a static roadmap (or worse, a traditional budgeted endeavor like our old software projects of the 1970s).

Instead of:

“We’ll deploy three AI models by Q4.”

Try:

“We’ll test three decision hypotheses and scale whichever ones deliver measurable value.”

Agile strategy cycles shift leadership focus from control and prediction to experimentation and learning. Each quarter becomes a feedback loop, a chance to inspect the results, adapt the hypotheses, and reallocate the investment.

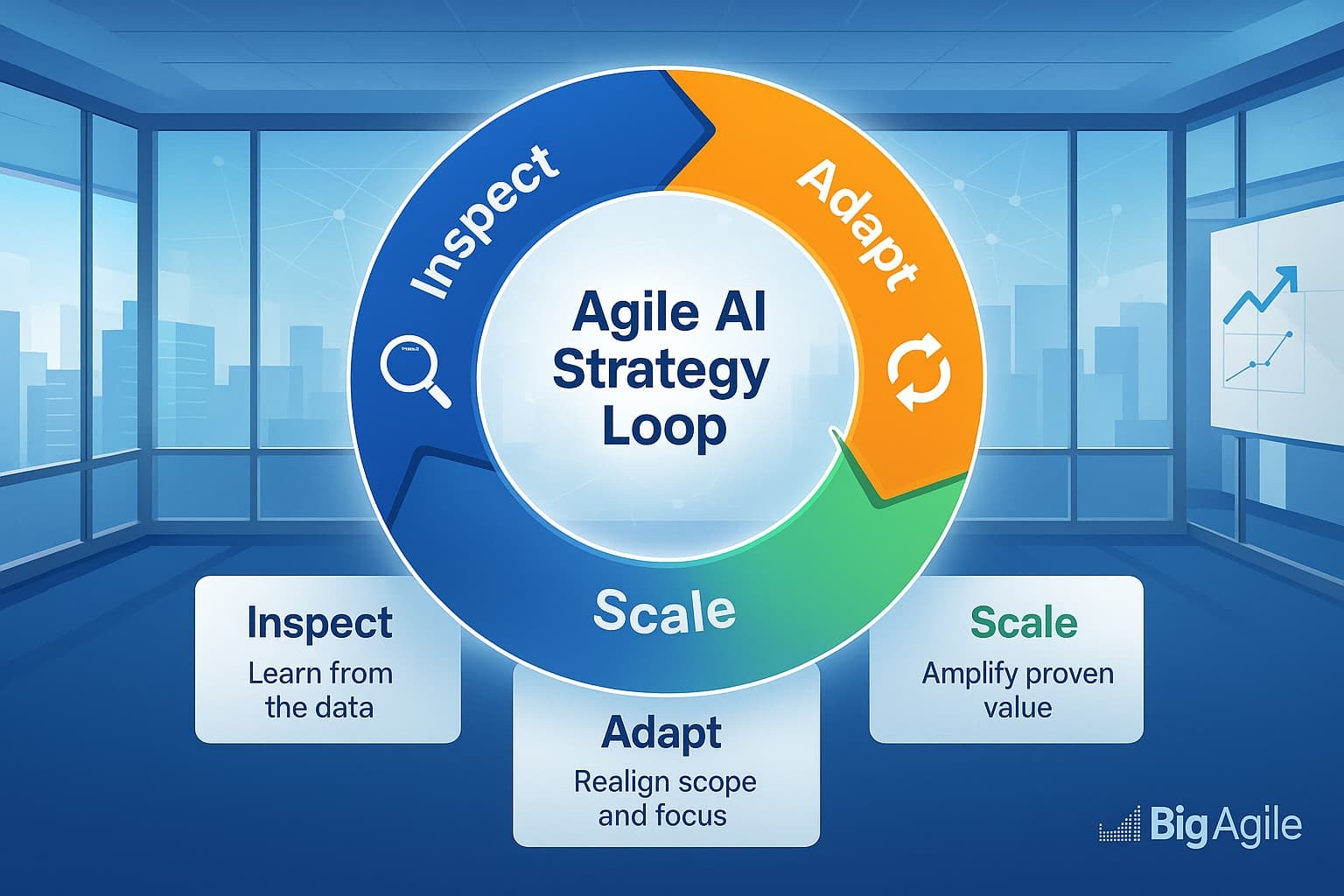

💡 Quick Framework: The Agile AI Strategy Loop

| Phase | Key Question | Goal |
|---|---|---|
| Inspect | What assumptions did our last cycle prove or disprove? | Learn from the data; surface signals vs. noise. |
| Adapt | What adjustments or pivots are needed now? | Realign scope, resources, and risk; set the next bet. |
| Scale | What validated initiatives can we expand safely? | Amplify proven value; harden governance and rollout. |

The beauty of this approach? It creates strategic agility, the ability to steer AI investments dynamically without falling into chaos.

⚙️ Using Sprint-Based Experimentation to Test Assumptions

Most AI projects die in the gap between idea and integration. Teams build models that technically work but don’t connect to real decisions or outcomes or, worse, ship without any governance or oversight.

To close that gap, apply sprint-based experimentation:

- Treat each model or automation as a time-boxed hypothesis.
- Design experiments that deliver feedback within 2–4 weeks.
- Evaluate results against the decisions they were meant to improve.

For example, instead of asking, “Can AI predict customer churn?”, reframe it as:

“Can we improve our customer retention decisions by 10% through AI-driven signals within one sprint?”

That subtle shift turns AI from a technology investment into a learning investment.
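To make the reframing concrete, here is a minimal sketch of a time-boxed decision hypothesis in Python. Everything in it is my own illustration rather than a prescribed method: the `DecisionHypothesis` class, its field names, the sample numbers, and the reading of “improve by 10%” as a relative lift in a single outcome metric are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class DecisionHypothesis:
    """A time-boxed bet tied to a decision, not to a model (hypothetical structure)."""
    statement: str
    baseline: float        # outcome metric before the sprint, e.g. a retention rate
    target_lift: float     # relative improvement we are betting on (0.10 means 10%)
    sprint_days: int = 14  # time box; assumed here to be a two-week sprint


def evaluate(h: DecisionHypothesis, observed: float, days_elapsed: int) -> str:
    """Classify one sprint as 'validated', 'not validated', or 'inconclusive'."""
    if days_elapsed < h.sprint_days:
        return "inconclusive"  # the time box has not closed yet
    lift = (observed - h.baseline) / h.baseline
    return "validated" if lift >= h.target_lift else "not validated"


# Illustrative numbers only, not real results.
churn_bet = DecisionHypothesis(
    statement="AI-driven signals improve retention decisions by 10% within one sprint",
    baseline=0.70,
    target_lift=0.10,
)
result = evaluate(churn_bet, observed=0.78, days_elapsed=14)
print(result)
```

In this made-up case, an observed rate of 0.78 against a 0.70 baseline is a relative lift of roughly 11%, so the bet counts as validated. A real evaluation would also need to consider sample size and statistical significance, which this sketch deliberately leaves out.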

🧠 Example: Shifting from an AI Pilot to a Sustainable Capability

A national retailer recently launched an AI pilot to forecast regional inventory demand. The model performed well in the lab, but once deployed, local managers ignored it.

Rather than declaring failure, leadership restructured the approach:

- Short sprint cycles focused on learning outcomes, not accuracy percentages.
- Each region tested one hypothesis per sprint, such as “Will predictive restocking reduce stockouts?”
- Weekly retros captured feedback from both data and human decision-makers.
- Lessons were shared, not buried, and the model evolved from a centralized one into an adaptive one.

Six months later, the team wasn’t just using AI; they were learning through it. Stockouts decreased by 14%, and regional managers shared ownership of the capability. That’s what agile strategy looks like: an evolving system, not a static plan.

📊 Metrics That Matter: Measuring Learning, Not Just Delivery

The most advanced organizations don’t measure AI success by the number of models deployed, but by the speed and quality of learning.

- Throughput: How many validated experiments can we complete per quarter?
- Feedback Velocity: How quickly do insights from AI loops inform real decisions?
- Learning Value: How often do learnings lead to measurable outcome improvement (e.g., cost reduction, faster decision-making, improved customer experience)?

When these metrics improve, your organization is developing AI learning velocity, the leading indicator of future ROI.
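One way to make these three metrics concrete is to compute them from a simple experiment log. The sketch below is illustrative only: the `Experiment` record, its field names, the sample data, and the choice of median days between result and decision as the measure of feedback velocity are all my assumptions, not a standard.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Experiment:
    """One sprint-sized experiment in a quarterly log (hypothetical structure)."""
    name: str
    finished: date                 # day the sprint produced its result
    validated: bool                # the experiment gave a clear, evidence-backed answer
    decision_date: Optional[date]  # day a real decision used the insight, if any
    improved_outcome: bool         # that decision led to a measurable improvement


def learning_metrics(experiments: list[Experiment]) -> dict:
    """Compute throughput, feedback velocity, and learning value for one quarter."""
    validated = [e for e in experiments if e.validated]
    lags = [
        (e.decision_date - e.finished).days
        for e in validated
        if e.decision_date is not None
    ]
    return {
        # Throughput: validated experiments completed in the period
        "throughput": len(validated),
        # Feedback velocity: median days from result to a decision that used it
        "feedback_velocity_days": median(lags) if lags else None,
        # Learning value: share of validated experiments that improved an outcome
        "learning_value": (
            sum(e.improved_outcome for e in validated) / len(validated)
            if validated
            else 0.0
        ),
    }


# Made-up log for illustration, not real data.
quarter_log = [
    Experiment("restock-north", date(2024, 1, 19), True, date(2024, 1, 26), True),
    Experiment("restock-south", date(2024, 2, 2), True, date(2024, 2, 5), False),
    Experiment("churn-signals", date(2024, 2, 16), False, None, False),
    Experiment("pricing-nudge", date(2024, 3, 1), True, None, False),
]
metrics = learning_metrics(quarter_log)
print(metrics)
```

With this made-up log, three of the four experiments were validated, the median lag from result to decision is five days, and one in three validated experiments led to a measurable improvement. Tracking how those numbers move from quarter to quarter matters more than any single value.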

🔁 The Real ROI of AI

The real ROI of AI doesn’t come from models that predict outcomes. It comes from organizations that learn how to adapt to what those outcomes reveal. AI becomes sustainable when it’s embedded in a feedback system, not a budget line.

To achieve agility in your AI investments, establish a cadence around learning, not delivery. Make your strategy cyclical, not linear. And remember: the goal isn’t automation, it’s adaptation.

📣 Next Step

Join us Friday for the LinkedIn Newsletter: 💡 “Adaptive Strategy: How to Turn AI Uncertainty into Business Advantage.”

We’ll explore how leaders govern AI investments with agility, striking a balance between innovation, ethics, and long-term value creation.