- Have OpenAI write the initial Given/When/Then statements, then let your developers critique them before Sprint Planning even begins.

Why This Matters.

First-pass stories are useful, but testable stories are priceless. Acceptance criteria turn a polite request (“Let patients upload labs”) into a measurable goal (“Given a 10 MB PDF on mobile …”). When criteria are clear before work starts, developers code with confidence, testers automate earlier, and the product team has a clearer understanding of what “done” means for the user. However, writing criteria takes real mental effort: it demands domain knowledge and precise wording. Automating the first draft lets the product team focus on details such as edge cases, UX nuances, and regulatory issues, rather than struggling with Given/When/Then syntax.

An added benefit is psychological safety: developers join refinement sessions knowing someone has already considered failure scenarios; testers begin a sprint feeling their concerns have been acknowledged; the PO stops worrying about the “do we even know what done means?” question in planning. The team can dedicate energy to creative problem-solving instead of clarifying first principles. This is especially important in distributed teams: an asynchronous Slack thread gives testers and developers in different time zones an equal chance to ask questions before coding begins, rather than discovering misalignment after an overnight merge.

The Pain.

Most product owners admit they spend more time writing acceptance criteria (AC) than creating stories; done well, it often takes 20-30 minutes per ticket. I believe POs should arrive with some AC drafted, but I am also fine with those criteria evolving during refinement, planning, and the sprint itself. Under tight deadlines, however, that time disappears, and the criteria become vague, such as “Upload works.” Developers then begin Sprint Planning with a flood of clarifying questions, which slows down estimation. These gaps ripple downstream: testers scramble to patch test cases mid-sprint, file bugs for scenarios that should have been caught earlier, and the cycle-time graph shows a new orange segment called “rework review.” You know the rest...

The problem isn’t only wasted time. Unclear criteria damage trust. Engineers feel they’re coding on shifting sand; product leaders worry about releasing incomplete features; testers are seen as “the delay.” In regulated fields like healthcare and fintech, vague acceptance criteria can lead to compliance issues that trigger executive escalations. Every sprint includes hidden wait time whenever someone asks, “What does ‘error handled gracefully’ actually mean?” and waits hours for an answer. Multiply that across 30 stories, and you’re looking at days of idle effort.

The Bet.

Our wager is simple: if a bot can draft three clean Given/When/Then lines in under sixty seconds, then a PO’s half-hour becomes a five-minute review, and the 25 minutes saved per story can be used for deeper collaboration. The bot is intentionally opinionated; it always provides three criteria and three edge cases, forcing the PO and developers to think, “Do we really care about that edge case? Are we missing one?” This prompts earlier discussions, not later bug reports.
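The arithmetic behind the bet is easy to check. A minimal sketch, assuming the 30-minute manual baseline and 5-minute review from this paragraph and the 30-story figure from The Pain; `minutes_saved` is a hypothetical helper, and its defaults are illustrative inputs, not measurements.

```python
def minutes_saved(stories: int, manual_min: float = 30, review_min: float = 5) -> float:
    """Return PO minutes freed when AI drafts replace manual AC writing.

    The defaults are the illustrative figures from this section, not measurements.
    """
    if stories < 0:
        raise ValueError("stories must be non-negative")
    return stories * (manual_min - review_min)


if __name__ == "__main__":
    saved = minutes_saved(30)  # 30 stories, as in The Pain
    print(f"{saved:.0f} minutes = {saved / 60:.1f} hours")
```

Run as a script, this prints `750 minutes = 12.5 hours`, before subtracting whatever time the Slack review threads themselves take.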

By embedding the criteria in a Slack thread immediately after generation, we turn the review into an asynchronous, low-friction exchange. Developers question assumptions while their context is fresh; testers refine test data before Sprint Planning; the PO updates the ticket in real time. The ✅ reaction isn’t just a cute signal; it’s a lightweight contract that the story is now INVEST-ready. We expect shorter planning meetings, story defects caught days earlier, and test automation starting on Day 1 instead of Day 3.
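One way to make the ✅ contract machine-checkable is to inspect the reactions on the bot's post. A minimal sketch, assuming the message dict has the shape Slack returns in the `message` field of a `reactions.get` response (a `reactions` list whose items carry `name`, `users`, and `count`); the approver IDs are hypothetical. Fetching the message (for example with slack_sdk's `reactions_get(channel=..., timestamp=...)`, which needs the `reactions:read` scope) is left out so the logic runs offline.

```python
from typing import Any, Iterable, Mapping

CHECK = "white_check_mark"  # Slack's short name for the ✅ emoji


def is_locked(message: Mapping[str, Any], approvers: Iterable[str]) -> bool:
    """Return True when at least one approver has reacted with ✅.

    `message` is assumed to look like the `message` field of a Slack
    reactions.get response; `approvers` is a hypothetical list of user IDs.
    """
    approver_set = set(approvers)
    for reaction in message.get("reactions", []):
        if reaction.get("name") == CHECK and approver_set & set(reaction.get("users", [])):
            return True
    return False


# Usage with a hand-built payload (user IDs are made up):
msg = {"reactions": [{"name": "eyes", "users": ["U_QA"], "count": 1},
                     {"name": "white_check_mark", "users": ["U_DEVLEAD"], "count": 1}]}
print(is_locked(msg, approvers=["U_DEVLEAD"]))  # True
print(is_locked(msg, approvers=["U_PO"]))       # False
```

Requiring a specific approver, rather than any ✅, matches the "until dev lead reacts" rule in the step table.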

The bot minimizes manual work while the team keeps the judgment calls.

What You Need.

To set up the Acceptance-Criteria workflow, you need three components. First, a backlog export that already includes the AI tags we’ve been using, so the script knows which stories to process. Second, access tokens for OpenAI and Slack, so the bot can draft criteria and post threads where your team collaborates. Finally, a lightweight Python script (using the pandas, schedule, openai, and slack_sdk packages) that picks up new tickets on a timer and posts the JSON drafts to your existing triage channel.

With these pieces in place, everything else is straightforward.

- ai_tags.csv: backlog IDs + summaries.

- OpenAI API key with access to GPT-4o.

- Slack bot token with the chat:write scope, for a bot that has been invited to your backlog channel.

- ac_bot.py (below) + schedule lib for optional hourly runs.
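Before scheduling anything, it can help to fail fast when a prerequisite is missing. A minimal sketch, assuming the environment variable names ac_bot.py reads (`SLACK_BOT_TOKEN`, `OPENAI_API_KEY`) and the `ID` and `Summary` columns it expects in ai_tags.csv; `preflight` is a hypothetical helper that uses the standard-library csv module instead of pandas to stay dependency-free.

```python
import csv
import os
from typing import List, Mapping

REQUIRED_ENV = ("SLACK_BOT_TOKEN", "OPENAI_API_KEY")
REQUIRED_COLUMNS = ("ID", "Summary")


def preflight(csv_path: str, env: Mapping[str, str] = os.environ) -> List[str]:
    """Return human-readable problems; an empty list means the bot is ready to run."""
    problems = [f"missing env var {name}" for name in REQUIRED_ENV if not env.get(name)]
    if not os.path.exists(csv_path):
        problems.append(f"backlog export not found: {csv_path}")
        return problems
    # utf-8-sig tolerates the byte-order mark that spreadsheet exports often add
    with open(csv_path, newline="", encoding="utf-8-sig") as fh:
        header = next(csv.reader(fh), [])
    problems += [f"column {col!r} missing from {csv_path}"
                 for col in REQUIRED_COLUMNS if col not in header]
    return problems


if __name__ == "__main__":
    for issue in preflight("ai_tags.csv") or ["all prerequisites present"]:
        print(issue)
```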

Step By Step.

| Step | Action | Output |

|---|---|---|

| 1 – Fetch new stories | Script reads Jira for tickets in “Ready for AC”. | List of story IDs. |

| 2 – Call GPT-4o | Prompt returns JSON: 3 criteria + edge-case list. | AI acceptance-criteria bundle. |

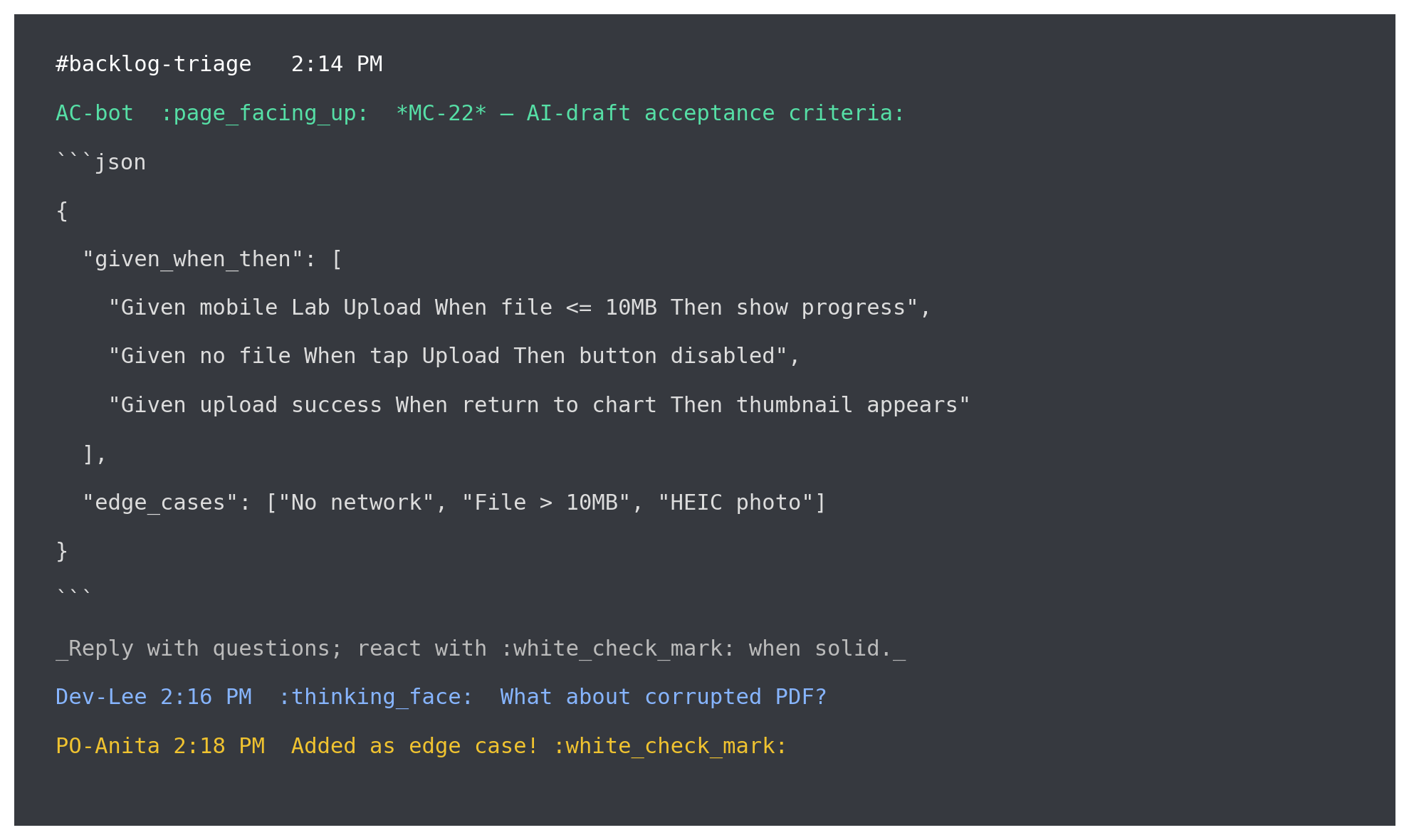

| 3 – Post to Slack | Bot threads the JSON under the story link in #backlog-triage. | Devs & QA reply with emojis or questions. |

| 4 – Refine in thread | PO edits criteria in Jira until dev lead reacts ✅. | Criteria locked before Sprint Planning. |
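Step 1 only needs to hand the model stories that are ready and not yet drafted; otherwise an hourly schedule would re-post the same criteria on every run. A minimal sketch, assuming the export carries a hypothetical `Status` column alongside `ID` and `Summary`, and that already-posted IDs are tracked somewhere the bot can read (an in-memory set here; a file or database in practice).

```python
from typing import Dict, Iterable, List, Set

READY_STATUS = "Ready for AC"


def stories_to_process(rows: Iterable[Dict[str, str]], posted: Set[str],
                       status: str = READY_STATUS) -> List[Dict[str, str]]:
    """Return rows whose Status matches `status` and whose ID is not in `posted`.

    `rows` are dicts like those produced by csv.DictReader; the Status column
    is an assumption, not part of the original export.
    """
    fresh = []
    for row in rows:
        story_id = row.get("ID", "").strip()
        if story_id and row.get("Status") == status and story_id not in posted:
            fresh.append(row)
    return fresh


# Made-up rows for illustration:
backlog = [
    {"ID": "DEMO-1", "Summary": "Let patients upload labs", "Status": "Ready for AC"},
    {"ID": "DEMO-2", "Summary": "Send appointment reminders", "Status": "Ready for AC"},
    {"ID": "DEMO-3", "Summary": "Export chart as PDF", "Status": "In Progress"},
]
print([r["ID"] for r in stories_to_process(backlog, posted={"DEMO-2"})])  # ['DEMO-1']
```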

Prompt Template

```
You are a senior QA engineer.
For the user story below, return JSON:
given_when_then : list[3] strings formatted “Given … When … Then …”
edge_cases : list[3] short bullet edge cases
Story: "{summary}"
```
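Because the template asks for exactly three criteria and three edge cases, the bot can reject malformed replies before they reach Slack. A minimal sketch, assuming the model returns a JSON object with the two keys named in the template, optionally wrapped in a Markdown code fence; `parse_ac` is a hypothetical helper, and the Given/When/Then check is a loose keyword-order test, not a grammar.

```python
import json
import re
from typing import Dict, List

FENCE = "`" * 3  # Markdown code-fence marker the model sometimes wraps JSON in
# Loose check: starts with "Given", then contains "When", then "Then" (case-insensitive).
GWT_PATTERN = re.compile(r"^\s*Given\b.+\bWhen\b.+\bThen\b.+", re.IGNORECASE | re.DOTALL)


def _strip_fence(raw: str) -> str:
    """Drop a surrounding Markdown code fence if the model added one."""
    text = raw.strip()
    if text.startswith(FENCE):
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    return text


def parse_ac(raw: str) -> Dict[str, List[str]]:
    """Parse the model reply and enforce the 3 + 3 contract; raise ValueError otherwise."""
    data = json.loads(_strip_fence(raw))  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in ("given_when_then", "edge_cases"):
        items = data.get(key)
        if not (isinstance(items, list) and len(items) == 3
                and all(isinstance(s, str) and s.strip() for s in items)):
            raise ValueError(f"{key} must be a list of 3 non-empty strings")
    bad = [s for s in data["given_when_then"] if not GWT_PATTERN.match(s)]
    if bad:
        raise ValueError(f"not in Given/When/Then form: {bad}")
    return {key: data[key] for key in ("given_when_then", "edge_cases")}
```

Catching `ValueError` around `parse_ac` covers both invalid JSON and a broken 3 + 3 shape, so a caller can retry the model call once or post the raw draft with a warning.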

Slack Q&A Loops

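```python
#!/usr/bin/env python3
# pip install slack_sdk openai schedule pandas
# Uses the openai>=1.0 client API; the older openai.ChatCompletion call was removed in 1.0.

import os
import time

import pandas as pd
import schedule
from openai import OpenAI
from slack_sdk import WebClient

SLACK = WebClient(token=os.environ["SLACK_BOT_TOKEN"])
OPENAI = OpenAI()  # reads OPENAI_API_KEY from the environment
CHANNEL = "#backlog-triage"  # a channel ID (e.g. "C0123...") is more robust than a name

PROMPT = """You are a senior QA engineer.
For the user story below, return JSON:
given_when_then: list of 3 strings formatted "Given ... When ... Then ..."
edge_cases: list of 3 short edge cases
Story: "{summary}"
"""

posted = set()  # story IDs sent during this process's lifetime, so hourly runs don't repost


def generate_ac(summary):
    """Ask GPT-4o for a JSON acceptance-criteria draft for one story."""
    rsp = OPENAI.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": PROMPT.format(summary=summary)}],
        response_format={"type": "json_object"},  # JSON mode; the prompt must mention JSON
        temperature=0.3,
    )
    return rsp.choices[0].message.content


def post_thread(story_id, summary):
    """Draft criteria for one story and post them to the triage channel."""
    ac_json = generate_ac(summary)
    msg = (f"*{story_id}* — AI-draft acceptance criteria:\n```{ac_json}```\n"
           "_Reply with questions; react with :white_check_mark: when solid._")
    SLACK.chat_postMessage(channel=CHANNEL, text=msg)


def run_once():
    """Post drafts for every story in the export that hasn't been posted yet."""
    df = pd.read_csv("ai_tags.csv")
    for story_id, summary in zip(df["ID"], df["Summary"]):
        if story_id in posted:
            continue
        post_thread(story_id, summary)
        posted.add(story_id)


if __name__ == "__main__":
    run_once()  # fire on start
    schedule.every().hour.do(run_once)
    while True:
        schedule.run_pending()
        time.sleep(60)
```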
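Step 3 describes the bot threading its draft under the story link, while the script above posts one top-level message per story. A minimal sketch of the threaded variant, assuming a hypothetical `JIRA_BASE` URL for story links; it relies on Slack's `chat.postMessage` response containing the parent message's `ts` and resolved `channel`, which are passed back (as `thread_ts`) for the reply. The client is a parameter so the function can be exercised with a stub.

```python
from typing import Any

JIRA_BASE = "https://your-company.atlassian.net/browse"  # hypothetical tracker URL
FENCE = "`" * 3  # Slack renders text between triple backticks as a code block


def post_threaded(client: Any, channel: str, story_id: str, ac_text: str) -> str:
    """Post a story link as the parent message, then the AC draft as a thread reply.

    `client` is a slack_sdk WebClient or anything with a compatible chat_postMessage.
    Returns the ts of the reply, which is the message reviewers react to with ✅.
    """
    parent = client.chat_postMessage(channel=channel, text=f"<{JIRA_BASE}/{story_id}|{story_id}>")
    reply = client.chat_postMessage(
        channel=parent["channel"],  # the channel ID Slack resolved for the parent post
        thread_ts=parent["ts"],     # passing thread_ts makes this a reply in that thread
        text=(f"AI-draft acceptance criteria:\n{FENCE}{ac_text}{FENCE}\n"
              "_Reply with questions; react with :white_check_mark: when solid._"),
    )
    return reply["ts"]
```

In ac_bot.py, this would replace the single `SLACK.chat_postMessage` call inside `post_thread`, for example `post_threaded(SLACK, CHANNEL, story_id, ac_json)`.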

Sample AI Output

| Story ID | Given / When / Then (AI Draft) | Edge Cases (AI Suggests) |
|---|---|---|
| MC-22 | 1. Given the mobile Lab Upload screen When a file ≤ 10 MB is selected Then a progress bar shows until success. 2. Given no file chosen When the Upload button is tapped Then the button stays disabled. 3. Given upload success When the user returns to results Then a thumbnail appears in the chart. | • No network connection • File > 10 MB • HEIC photo format |
| MC-12 | 1. Given a confirmed appointment When 24 h remain Then a push notification is sent. 2. Given notification settings disabled When 24 h remain Then only an email reminder is sent. 3. Given a sent push When the patient opens it Then the app navigates to appointment details. | • Time-zone mismatch • Device push permissions denied • Appointment rescheduled inside 24 h |

What Good Looks Like.

Each row in the table represents a story-level “contract.” The Given / When / Then column outlines the AI’s initial test scenarios: context (Given), trigger (When), and expected system response (Then). Treat these as conversation starters rather than definitive answers: developers and testers should review them for missing steps or ambiguous language and respond in the Slack thread.

The Edge Cases column highlights situations the AI thinks could break the happy path; the team decides which cases belong in this sprint and which should be deferred as technical-debt items or spikes. Your steps: verify that each criterion is objectively testable, add or remove edge cases as needed, and react with ✅ once the story is truly INVEST-ready. What not to do: accept the draft without question, or pad the list with “nice-to-haves” that turn a one-sprint task into a mini-epic.
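“Objectively testable” can be partly automated: a crude lint can flag words that usually hide an untestable expectation, like the “Upload works” and “error handled gracefully” examples from The Pain. A minimal sketch; the `VAGUE_TERMS` list is an assumption to tune for your domain, and a flag is a prompt for discussion in the thread, not an automatic rejection.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical starter list; extend it with terms your team keeps arguing about.
VAGUE_TERMS = ("works", "gracefully", "properly", "fast", "quickly", "user-friendly",
               "appropriate", "as expected", "etc")


def flag_vague(criteria: Iterable[str], terms: Iterable[str] = VAGUE_TERMS) -> Dict[str, List[str]]:
    """Map each criterion to the vague terms it contains; clean criteria are omitted."""
    patterns = {t: re.compile(rf"\b{re.escape(t)}\b", re.IGNORECASE) for t in terms}
    flagged = {}
    for line in criteria:
        hits = [t for t, pat in patterns.items() if pat.search(line)]
        if hits:
            flagged[line] = hits
    return flagged


print(flag_vague(["Given an upload When it finishes Then upload works",
                  "Given no file chosen When the Upload button is tapped Then the button stays disabled."]))
# {'Given an upload When it finishes Then upload works': ['works']}
```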

Move quickly and commit. Criteria should be drafted in under 60 seconds per story, with the PO time saved reinvested in discovery. Slack threads surface edge cases days earlier, trimming another 10 minutes from Sprint Planning. Testers write tests on Day 1 because the criteria are largely locked, not “TBD.”
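Testers can start on Day 1 partly because Given/When/Then lines map almost directly onto Gherkin, the syntax read by tools such as Cucumber and behave. A minimal sketch that turns one story's draft into a `.feature` skeleton, assuming each criterion is a single sentence with exactly one Given, one When, and one Then clause, as in the sample table; compound steps (“And”) are not handled, and the feature title is whatever you pass in.

```python
import re
from typing import List

CLAUSES = re.compile(
    r"^\s*Given\s+(?P<given>.+?)\s+When\s+(?P<when>.+?)\s+Then\s+(?P<then>.+?)\.?\s*$",
    re.IGNORECASE | re.DOTALL,
)


def to_feature(story_id: str, title: str, criteria: List[str]) -> str:
    """Render Given/When/Then sentences as a Gherkin feature with one scenario each."""
    lines = [f"Feature: {story_id} {title}", ""]
    for n, sentence in enumerate(criteria, start=1):
        m = CLAUSES.match(sentence)
        if not m:
            raise ValueError(f"cannot split into Given/When/Then: {sentence!r}")
        lines += [
            f"  Scenario: {story_id} criterion {n}",
            f"    Given {m['given'].rstrip(',')}",  # drop a trailing comma before When
            f"    When {m['when'].rstrip(',')}",    # drop a trailing comma before Then
            f"    Then {m['then']}",
            "",
        ]
    return "\n".join(lines).rstrip() + "\n"


print(to_feature("MC-22", "Lab upload",
                 ["Given no file chosen When the Upload button is tapped Then the button stays disabled."]))
```

The step definitions behind each line still have to be written by the testers; the skeleton only saves retyping and keeps the scenario wording identical to the agreed criteria.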

- AI writes; the squad sharpens. Less typing, more negotiating quality.