Cluster backlog items, surface hidden blockers, and walk into the workshop with a clear picture instead of a guess.

Why This Matters.

A quarterly roadmap isn’t a promise; it’s a conversation starter about sequencing, risk, and strategic bets. Yet most teams still build it in a spreadsheet jungle where cross-team dependencies hide behind filter views. A first-pass map drawn by AI gives everyone a shared picture in minutes, so the meeting can start by discussing real trade-offs rather than assembling sticky notes.

The Pain.

Manually grouping 60+ backlog items into themes, identifying dependency chains, and reshuffling when a new blocker appears can consume two sprints’ worth of PO and Dev time. The bigger the org, the more spreadsheets, the more stale data. By the time the deck reaches execs, half the links are wrong, and trust erodes.

The Bet.

Feed the AI a backlog export and let a three-step prompt chain:

- cluster stories by outcome theme,

- infer blocking relationships (A → B → C), and

- drop the output into Mermaid syntax.

Paste that text into any Markdown viewer that supports Mermaid, and the roadmap diagram renders instantly. Humans then validate:

- “Can B really start before C?”

- “Is security review a true blocker?”

The AI handles the heavy grouping; the team owns the final call.

Inputs You Need.

- CSV columns: ID, Summary, TargetQuarter, Tags. See our sample Jira file.

- Prompt chain (below), stored in chain.yaml or pasted into ChatGPT.

- Python helper (roadmap.py) that writes Mermaid from the AI's JSON.

- Mermaid Live Editor (https://mermaid.live) or any Mermaid-capable Markdown renderer in Confluence, GitHub, or Notion.
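A quick guard before running the chain is to confirm that the export really carries these four columns. The sketch below uses only the standard library; `check_backlog_csv` is a hypothetical helper, the column names come from the list above, and the sample row's quarter and tag values are made up for illustration. If your Jira export uses different headers, map them first.

```python
import csv
import io

# Column names from the "Inputs You Need" list; rename them if your export differs
REQUIRED = ["ID", "Summary", "TargetQuarter", "Tags"]


def check_backlog_csv(text):
    """Parse the CSV export and fail early if a required column is missing."""
    reader = csv.DictReader(io.StringIO(text))
    missing = [col for col in REQUIRED if col not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"backlog export is missing columns: {missing}")
    return list(reader)


# Illustrative row; the quarter and tag values are invented
sample = "ID,Summary,TargetQuarter,Tags\nMC-07,Low-bandwidth video,Q3,infra\n"
rows = check_backlog_csv(sample)
print(len(rows), rows[0]["ID"])  # 1 MC-07
```

For a real export, pass the file's text, e.g. `open("backlog.csv", encoding="utf-8").read()` (the file name is only an example).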

Step By Step

| Step | Action | Output |
|---|---|---|
| 1 - Export backlog | Run Jira filter → CSV with ID & summary columns. | Raw data file. |
| 2 - Run prompt chain | Feed CSV rows to GPT-4o using the 3-stage YAML chain. | JSON clusters + dependency list. |
| 3 - Generate Mermaid | `python roadmap.py ai_output.json` | `roadmap.md` with Mermaid graph. |
| 4 - Paste & review | Drop the Markdown into Confluence, or the bare graph (without the fence lines) into Mermaid Live; walk the team through the arrows. | Collaborative roadmap workshop. |
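Between steps 2 and 3, the model's reply has to become the file that roadmap.py reads. A minimal sketch of that hand-off, assuming the reply holds exactly one top-level JSON object (possibly surrounded by prose or a Markdown fence) and no stray braces outside it; `save_ai_output` is a hypothetical name, and `ai_output.json` is the file name used in step 3.

```python
import json


def save_ai_output(reply, path="ai_output.json"):
    """Extract the JSON object from a model reply and save it for roadmap.py."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the reply")
    data = json.loads(reply[start:end + 1])  # raises json.JSONDecodeError on malformed JSON
    for theme, blob in data.items():
        # roadmap.py expects {theme: {"items": [...]}}
        if not isinstance(blob, dict) or "items" not in blob:
            raise ValueError(f"theme {theme!r} has no 'items' list")
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(data, fh, indent=2)
    return data


reply = 'Here is the roadmap: {"Infrastructure": {"items": [{"id": "MC-07", "summary": "Low-bandwidth video", "blocks": []}]}}'
data = save_ai_output(reply)
print(list(data))  # ['Infrastructure']
```

If the reply's surrounding prose itself contains braces, the first-to-last-brace slice fails with `json.JSONDecodeError` (a subclass of `ValueError`), which is the cue to ask the model for JSON only.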

Prompt Chain

Here is the prompt chain as a YAML file (chain.yaml):

```yaml
- role: system
  content: |
    You are a seasoned Product Strategist.

- role: user
  content: |
    1. Cluster backlog items by outcome theme (max 6 themes).
    2. Within each theme, list items in likely execution order.
    3. Return JSON {theme:{items:[{id,summary,blocks}]}} where "blocks"
       is an array of IDs that must finish first.

    Backlog:
    {{CSV_ROWS}}
```
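The `{{CSV_ROWS}}` placeholder has to be filled with the export before the prompt is sent. One way to do it without a templating library, assuming the user message has already been loaded as a plain string; `fill_backlog` is a hypothetical helper, and the pipe-separated line format is a readability choice, not something the chain requires. Pasting the raw CSV lines is an equally valid choice.

```python
import csv
import io


def fill_backlog(prompt, csv_text):
    """Replace {{CSV_ROWS}} with one 'ID | Summary | TargetQuarter | Tags' line per item."""
    rows = csv.DictReader(io.StringIO(csv_text))
    lines = [
        " | ".join(row.get(col) or "" for col in ("ID", "Summary", "TargetQuarter", "Tags"))
        for row in rows
    ]
    return prompt.replace("{{CSV_ROWS}}", "\n".join(lines))


user_prompt = "Backlog:\n{{CSV_ROWS}}"  # tail of the user message in chain.yaml
csv_text = (
    "ID,Summary,TargetQuarter,Tags\n"
    "MC-07,Low-bandwidth video,Q3,infra\n"  # illustrative rows
    "MC-35,Secure messaging,Q3,engagement\n"
)
print(fill_backlog(user_prompt, csv_text))
```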

Roadmap Python Script

The helper script, roadmap.py, converts the AI's JSON into a Mermaid block and writes it to roadmap.md:

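```python
#!/usr/bin/env python3
# roadmap.py: turn the prompt chain's JSON into a Mermaid roadmap (standard library only).
# Usage: python roadmap.py ai_output.json
import json
import sys


def node_label(text):
    # Quote labels so brackets or parentheses in a summary don't break Mermaid;
    # inner double quotes become Mermaid's #quot; entity code.
    return '"' + str(text).replace('"', "#quot;") + '"'


with open(sys.argv[1], encoding="utf-8") as fh:
    data = json.load(fh)  # {theme: {"items": [{"id", "summary", "blocks"}]}}

lines = ["```mermaid", "graph LR"]
edges = []
for theme, blob in data.items():
    lines.append(f"  subgraph {theme}")
    for itm in blob["items"]:  # keep GPT's order; no re-sorting
        lines.append(f"    {itm['id']}[{node_label(itm['summary'])}]")
        # "blocks" lists IDs that must finish first, so arrows run blocker --> item
        for dep in itm.get("blocks", []):
            edges.append(f"  {dep} --> {itm['id']}")
    lines.append("  end")

# Add arrows only after every subgraph, so each node is first declared inside its own theme
lines.extend(edges)
lines.append("```")

with open("roadmap.md", "w", encoding="utf-8") as out:
    out.write("\n".join(lines) + "\n")

print("✅ roadmap.md ready")
```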

How the chain determines “what comes before what”

Clustering by outcome theme

The first stage asks GPT-4o to scan each backlog summary for keywords such as “notification,” “messaging,” “lab upload,” and “infra,” and to group items into no more than six themes. This keeps the graph readable and mirrors how humans mentally categorize work (Patient Delight, Infrastructure, Engagement).
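The grouping happens inside the model, but a crude keyword pass makes a handy cross-check: when GPT files an item far from its obvious keyword match, that is worth a question in the workshop. This is not how GPT-4o clusters internally; the keyword table is purely illustrative, loosely based on the example keywords and themes in this section, and `keyword_theme` and `disagreements` are hypothetical helpers.

```python
# Illustrative keyword -> theme table (an assumption, not part of the prompt chain)
KEYWORDS = {
    "Patient Delight": ["notification", "upload"],
    "Infrastructure": ["infra", "bandwidth", "server"],
    "Engagement": ["messaging", "chat"],
}


def keyword_theme(summary, keywords=KEYWORDS, default="Unsorted"):
    """Return the first theme whose keyword appears in the summary (case-insensitive)."""
    text = summary.lower()
    for theme, words in keywords.items():
        if any(word in text for word in words):
            return theme
    return default


def disagreements(ai_output, keywords=KEYWORDS):
    """List (id, ai_theme, keyword_theme) wherever the baseline and GPT disagree."""
    out = []
    for theme, blob in ai_output.items():
        for itm in blob["items"]:
            guess = keyword_theme(itm["summary"], keywords)
            if guess not in (theme, "Unsorted"):
                out.append((itm["id"], theme, guess))
    return out


ai = {
    "Patient Delight": {"items": [{"id": "MC-12", "summary": "Push notifications", "blocks": []}]},
    "Engagement": {"items": [{"id": "MC-07", "summary": "Low-bandwidth video", "blocks": []}]},
}
print(disagreements(ai))  # [('MC-07', 'Engagement', 'Infrastructure')]
```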

Inferring dependencies

In the second stage, the prompt instructs GPT to look for functional prerequisites within each theme and across themes:

- verbs like “enable,” “depends,” “after,” “requires”

- tags or IDs that suggest sequencing (e.g., media server before secure messaging)

- domain common sense (infra before features, core API before UI polish).

The model produces a blocks array for each item: the IDs that must be completed first. Think of it as a lightweight DAG (directed acyclic graph), although nothing forces the model's output to be acyclic, so it is worth checking.
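Because nothing guarantees that model output is acyclic, the blocks arrays deserve a check before they are drawn. A sketch using Python's standard `graphlib` module (Python 3.9+), whose `TopologicalSorter` takes a mapping from each node to its predecessors, which is exactly what a blocks array lists. `check_dag` is a hypothetical helper; it also reports blocker IDs that do not match any item.

```python
from graphlib import CycleError, TopologicalSorter


def check_dag(ai_output):
    """Return (unknown_ids, cycle) for the "blocks" lists in the chain's JSON.

    unknown_ids: blocker IDs that never appear as an item.
    cycle: one dependency loop as reported by graphlib, or [] if the graph is a DAG.
    """
    # graphlib wants {node: predecessors}; "blocks" already lists each item's predecessors
    graph = {
        itm["id"]: set(itm.get("blocks", []))
        for blob in ai_output.values()
        for itm in blob["items"]
    }
    unknown = sorted({dep for deps in graph.values() for dep in deps} - set(graph))
    try:
        TopologicalSorter(graph).prepare()  # prepare() raises CycleError on a loop
    except CycleError as err:
        return unknown, list(err.args[1])  # args[1] holds the detected cycle
    return unknown, []


ok = {
    "Infrastructure": {"items": [{"id": "MC-07", "summary": "Low-bandwidth video", "blocks": []}]},
    "Engagement": {"items": [{"id": "MC-35", "summary": "Secure messaging", "blocks": ["MC-07"]}]},
}
print(check_dag(ok))  # ([], [])
```

A non-empty cycle is the cue to delete one of its arrows (or push back on the model) before running roadmap.py.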

Ordering items topologically

Within each theme, items should appear in an order that respects every blocks relationship. In effect, the prompt asks GPT for a topological sort:

- If A blocks B, A appears higher in the JSON list.

- Items without incoming arrows float to the top.

- Ties (no direct dependency) stay in the CSV order to preserve any product owner intuition.
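Read together, the three rules amount to a topological sort with a stable tie-break; Kahn's algorithm with a priority queue keyed on CSV position is one way to implement that. If you want to reproduce or double-check GPT's ordering locally, here is a sketch for a single theme; `order_theme` is a hypothetical helper, only blockers inside the same theme influence the order, and the example items are illustrative.

```python
import heapq


def order_theme(items):
    """Order one theme's items so blockers come first, breaking ties by CSV position.

    items: list of {"id", "summary", "blocks"} dicts in original CSV order.
    """
    pos = {itm["id"]: i for i, itm in enumerate(items)}
    # Only blockers in this theme matter; cross-theme arrows are ignored here
    waiting = {itm["id"]: {d for d in itm.get("blocks", []) if d in pos} for itm in items}
    unlocks = {item_id: [] for item_id in pos}
    for item_id, deps in waiting.items():
        for dep in deps:
            unlocks[dep].append(item_id)

    # Items with no incoming arrows start out ready; the heap pops the earliest CSV row first
    ready = [pos[item_id] for item_id, deps in waiting.items() if not deps]
    heapq.heapify(ready)
    ordered = []
    while ready:
        current = items[heapq.heappop(ready)]
        ordered.append(current)
        for nxt in unlocks[current["id"]]:
            waiting[nxt].discard(current["id"])
            if not waiting[nxt]:
                heapq.heappush(ready, pos[nxt])
    if len(ordered) != len(items):
        raise ValueError("cycle inside theme; fix the 'blocks' lists first")
    return ordered


items = [
    {"id": "C", "summary": "UI polish", "blocks": ["B"]},
    {"id": "A", "summary": "Core API", "blocks": []},
    {"id": "B", "summary": "Feature", "blocks": ["A"]},
    {"id": "D", "summary": "Docs", "blocks": []},
]
print([itm["id"] for itm in order_theme(items)])  # ['A', 'B', 'C', 'D']
```

With this tie-break, an item becomes eligible as soon as its blockers are placed, so it can move ahead of an unblocked item that sits later in the CSV (C lands before D here).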

A Python script visualizes the AI's decision

roadmap.py reads the JSON and outputs Mermaid:

- Each subgraph lists its items in the sequence GPT generated; the script does no additional sorting.

- For every dep → item pair, an arrow is added.

- Cross-theme arrows run left-to-right, so items naturally move later on the X-axis if they depend on earlier themes.
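Mermaid's layout engine decides the final positions, but a rough predictor of how far right an item drifts is its depth: the length of the longest blocker chain in front of it. A sketch of that calculation over the whole JSON, assuming the graph has already passed a cycle check (a loop would exhaust the recursion limit); `layer_of` is a hypothetical helper, not something roadmap.py computes, and the example input is JSON consistent with the Sample Mermaid Output.

```python
from functools import lru_cache


def layer_of(ai_output):
    """Map each item ID to its depth: 0 if nothing blocks it, else 1 + its deepest blocker."""
    blocks = {
        itm["id"]: tuple(itm.get("blocks", []))
        for blob in ai_output.values()
        for itm in blob["items"]
    }

    @lru_cache(maxsize=None)
    def depth(item_id):
        deps = [d for d in blocks.get(item_id, ()) if d in blocks]
        return 1 + max(depth(d) for d in deps) if deps else 0

    return {item_id: depth(item_id) for item_id in blocks}


sample = {
    "Patient Delight": {"items": [
        {"id": "MC-12", "summary": "Push notifications", "blocks": []},
        {"id": "MC-22", "summary": "44-px upload button", "blocks": []},
    ]},
    "Infrastructure": {"items": [{"id": "MC-07", "summary": "Low-bandwidth video", "blocks": []}]},
    "Engagement": {"items": [{"id": "MC-35", "summary": "Secure messaging", "blocks": ["MC-07"]}]},
}
print(layer_of(sample))  # {'MC-12': 0, 'MC-22': 0, 'MC-07': 0, 'MC-35': 1}
```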

Human override remains simple

Dislike the order? Swap items in the JSON list or delete an arrow (remove the ID from the item's blocks array), then regenerate the diagram; Mermaid re-renders instantly. The AI provides a plausible first pass; your team validates feasibility and business relevance.
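Deleting an arrow means removing one ID from an item's blocks array. If you prefer scripted overrides to hand-editing, a small helper such as the hypothetical `drop_dependency` below does it; afterwards, write the dict back to ai_output.json (for example with `json.dump`) and rerun roadmap.py.

```python
def drop_dependency(ai_output, blocker, item_id):
    """Delete the blocker --> item_id arrow in place; return True if one was removed."""
    for blob in ai_output.values():
        for itm in blob["items"]:
            if itm["id"] == item_id and blocker in itm.get("blocks", []):
                itm["blocks"].remove(blocker)
                return True
    return False


roadmap = {
    "Infrastructure": {"items": [{"id": "MC-07", "summary": "Low-bandwidth video", "blocks": []}]},
    "Engagement": {"items": [{"id": "MC-35", "summary": "Secure messaging", "blocks": ["MC-07"]}]},
}
print(drop_dependency(roadmap, "MC-07", "MC-35"))  # True
print(roadmap["Engagement"]["items"][0]["blocks"])  # []
```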

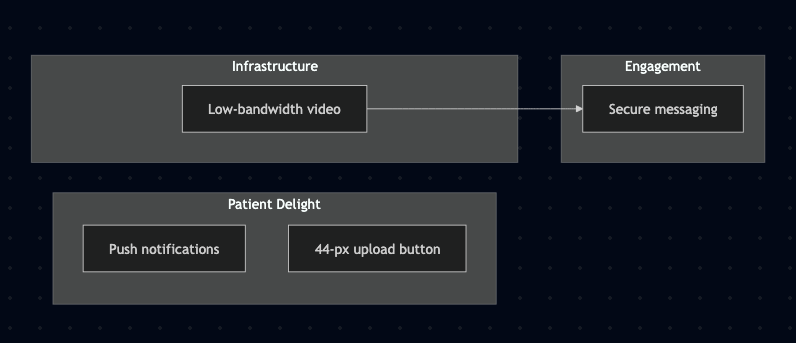

Sample Mermaid Output

```mermaid
graph LR
  subgraph Patient Delight
    MC-12[Push notifications]
    MC-22[44-px upload button]
  end
  subgraph Infrastructure
    MC-07[Low-bandwidth video]
  end
  subgraph Engagement
    MC-35[Secure messaging]
  end
  MC-07 --> MC-35
```

How to read the Mermaid roadmap

The diagram is a bird’s-eye map of when and in what order backlog items should land:

- Subgraphs (swim-lanes) group items by outcome theme: Patient Delight, Infrastructure, Engagement, and so on.

- Nodes inside each subgraph show the story ID plus a shorthand summary, listed top-to-bottom in the order we expect to tackle them.

- Arrows point from a blocker to the item it unlocks. If you see MC-07 ➔ MC-35, the low-bandwidth video work must finish before secure messaging can start.

- Left-to-right flow represents time: earlier work sits on the left edge, and downstream stories drift right as their dependency chains lengthen.

Read it like a metro map: follow any node’s outgoing arrows to see what depends on it, and trace incoming arrows to find what it’s waiting on.

One glance tells you where a theme starts, where it stops, and which technical spikes could bottleneck an entire quarter.
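Tracing arrows by eye works for a handful of nodes; with 60+ items, a short breadth-first walk can list everything downstream of each node and rank likely bottlenecks. A sketch over the chain's JSON; `downstream` and `bottlenecks` are hypothetical helpers, the example IDs are illustrative, and ranking by the number of downstream items is one simple heuristic, not a feature of Mermaid or roadmap.py.

```python
from collections import deque


def downstream(ai_output):
    """Map each item ID to the set of items that transitively wait on it."""
    unlocks = {}
    for blob in ai_output.values():
        for itm in blob["items"]:
            unlocks.setdefault(itm["id"], set())
            for dep in itm.get("blocks", []):
                unlocks.setdefault(dep, set()).add(itm["id"])

    result = {}
    for start in unlocks:
        seen, queue = set(), deque([start])
        while queue:  # breadth-first walk along outgoing arrows
            for nxt in unlocks[queue.popleft()]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        result[start] = seen
    return result


def bottlenecks(ai_output, top=3):
    """Return the IDs with the most downstream work, largest first."""
    reach = downstream(ai_output)
    return sorted(reach, key=lambda item_id: len(reach[item_id]), reverse=True)[:top]


chain = {"Platform": {"items": [
    {"id": "A", "summary": "Core API", "blocks": []},
    {"id": "B", "summary": "Feature", "blocks": ["A"]},
    {"id": "C", "summary": "UI polish", "blocks": ["B"]},
]}}
print(sorted(downstream(chain)["A"]))  # ['B', 'C']
print(bottlenecks(chain, top=1))  # ['A']
```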

What Good Looks Like

When the roadmap process clicks, you’ll notice the difference right away: the very first workshop begins with a shared, dynamic diagram instead of a blank whiteboard. Dependencies are explicit: the diagram shows that your ops spike blocks secure messaging, so the team can address the risk while it’s still affordable.

Story groups align under quarters, executives understand sequencing at a glance, and GTM can already identify which launches need enablement. Most importantly, the visual updates as quickly as changes happen: change one tag, rerun the chain and the script, paste the new Mermaid block, and everyone’s view stays current. That is “good” in an AI-native backlog world: clarity in minutes, lasting alignment, and a roadmap that adapts with discovery rather than breaking under it.

Recap:

- As a team, we agree on the same dependencies within the first five minutes, so few hidden blockers surface mid-quarter.

- Roadmap workshop time decreases by 30%, because the skeleton is visible on-screen from the start.

- Confidence increases: execs understand why a theme moved, developers know which spike unblocks downstream work, and the GTM team knows where to queue enablement.

AI laid out the initial framework; now the team adds context, challenges assumptions, and commits to progress. Use the picture to ask “What’s missing?” rather than “Where do we start?”