The experiment seemed like a sure thing.

The product team had been exploring ways to speed up definition-of-done checks, so they trained an AI to generate acceptance criteria for new user stories automatically. The demo was impressive, with clear sentences delivered in seconds. They deployed it into their refinement process and, within two sprints, the cracks started to show. The AI missed edge cases, misinterpreted technical dependencies, and produced vague criteria that led to rework.

By the third sprint, the team abandoned the tool… but in doing so, they realized the exercise had exposed inconsistencies in how they wrote acceptance criteria in the first place. That insight led to a new shared template, clearer refinement discussions, and their biggest process improvement of the year.

What.

Mark Graban’s The Mistakes That Make Us treats mistakes as essential raw material for learning, especially in complex systems like software delivery. Rather than failures to be avoided, mistakes become crucial opportunities for learning and improvement. In an agile or Scrum context, this aligns directly with the inspect-and-adapt mindset: every sprint, experiment, or AI trial is a chance to uncover hidden gaps in data, processes, or assumptions.

His emphasis on creating a culture where errors are surfaced without fear supports psychological safety, enabling teams to address issues early and iterate faster. Applying these principles helps agile teams treat AI missteps as valuable feedback loops rather than setbacks, accelerating their ability to adapt and deliver value.

Building on this, Craig Larman’s emphasis on short, iterative learning loops gives teams a way to turn those mistakes into rapid improvement (while doing “real” work). As coaches, we usually know this already. In practice, though, it’s just hard. AI experiments fit naturally here: each trial, whether it works or fails, is a feedback loop that teaches you something about your data quality, workflow, or assumptions.

The key is to see AI not as a one-and-done solution, but as an evolving collaborator that gets sharper with each cycle of “try, learn, adapt.”

So What.

The danger isn’t AI failing; it’s treating those failures as verdicts instead of clues. When an AI suggestion flops, it can signal:

Data gaps – The model can’t perform well without relevant, high-quality inputs.

Process misalignment – The tool doesn’t fit naturally into the team’s cadence or workflow.

Assumption errors – We believed something about our work that wasn’t true, and the AI made that visible.

If teams are too quick to discard AI after one bad trial, they lose the chance to uncover and fix these underlying issues. The real loss isn’t the failed experiment; it’s the missed learning.

Now What.

To make AI a sustainable part of agile delivery, embrace the “safe-to-fail” mindset:

Start small – Limit experiments to low-risk work so that failures are affordable.

Timebox trials – Give each experiment a clear start and end date to evaluate results quickly.

Reflect intentionally – Include AI experiments in retrospectives; ask “What did this teach us about our process?”

Scale selectively – Only expand an AI tool’s use after confirming it works in varied contexts.

When paired with agile’s iterative loops, this approach helps ensure that even a “failed” AI experiment accelerates your learning.

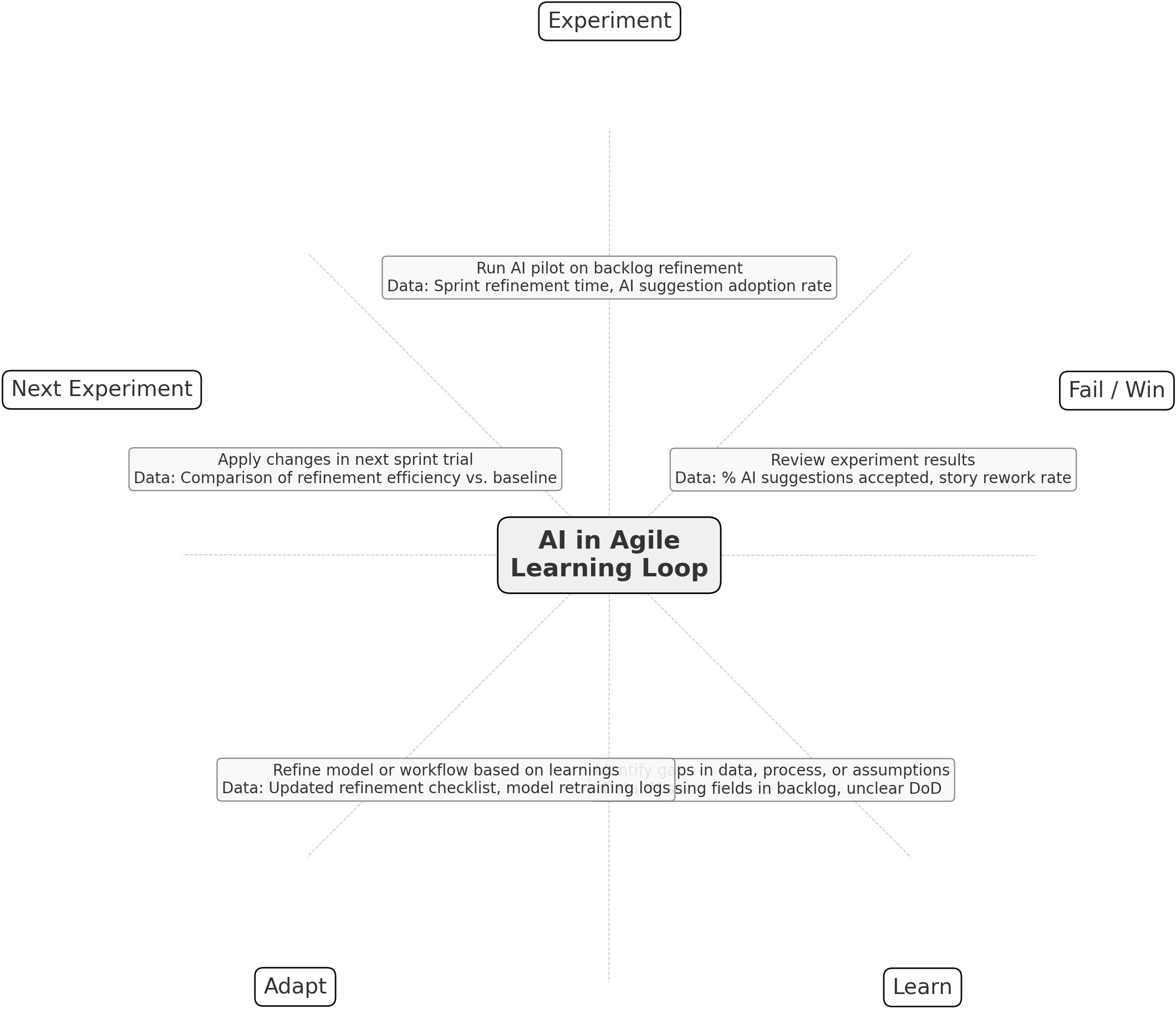

The loop above is a practical guide for running AI experiments in agile without letting failures derail progress. Start by running small, timeboxed experiments (“Experiment”) and collecting relevant metrics like adoption rates or time saved. Review outcomes to see where the AI helped and where it fell short (“Fail/Win”), then dig into the data to uncover gaps in process, information, or assumptions (“Learn”).

Adapt your model, workflow, or team agreements based on those insights (“Adapt”), and roll the changes into the next small-scale trial (“Next Experiment”). By repeating this cycle, you turn every AI trial into a safe-to-fail learning opportunity that steadily improves your team’s ability to deliver value.

Let's Do This!

An AI-enhanced agile team is not a perfect machine (even if that is what the business might prefer in the short term); it’s a learning organism. Every failed experiment is a stress test of your assumptions, processes, and data. The value comes not from eliminating mistakes but from making them quickly, safely, and visibly, then feeding those lessons back into the next sprint.

In the long run, the teams that win with AI won’t be the ones who avoid failure; they’ll be the ones who master the art of learning from it faster than anyone else.